I have a confession to make: I’m addicted to trip planning.

I blame my first-world problem on two things: curiosity and dynamic pricing. While curiosity fuels my craving to explore new places, dynamic pricing has taken this craving to a whole new level.

Long gone are the days when I would walk into a travel agency and accept whatever I was offered. Today, I spend days monitoring flight prices online, always on the lookout for a good deal.

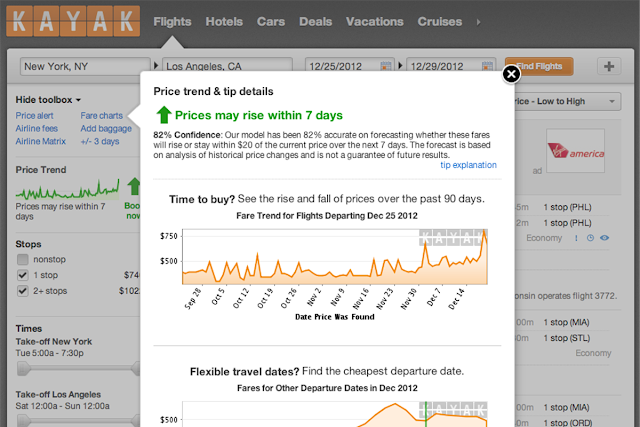

In early 2013, the travel search engine Kayak introduced a fare-forecasting tool that lets travellers assess whether the prices they see are likely to rise or fall within the next week. This was the first time I began to wonder how the travel industry forecasts demand and optimises its prices. What lies behind those flight purchase recommendations?

I soon learned that Kayak combines large sets of historical data from prior search queries with complex mathematical models to build its forecasting algorithm. I imagined that some of the input variables include the current number of unsold seats, the date and time of the booking (especially the number of days left until departure) and the current competition on the same route. But what if Kayak also learned about my personal preferences from my past behaviour? If there were only one window seat left on that flight, would it change its recommendation from "wait" to "buy"? Would it be able to recommend a trip to me, knowing that I prefer nature over cityscapes, am most likely to buy on Tuesdays and usually travel over the weekend?

A lot has happened in dynamic pricing since 2013, of course. One of the latest companies to join the trend is Airbnb. Its hottest new feature, Price Tips, helps hosts price their listings dynamically using the company’s new open-source machine learning library, Aerosolve. Airbnb’s use of machine learning isn’t limited to standard dynamic pricing, though: its models automatically generate local neighbourhoods, rank images and learn hosts’ preferences for accommodation requests based on their past behaviour.

What does this mean for trip-planning addicts?

Well, I'm personally looking forward to the day when choosing my next travel destination becomes simpler. I picture personalised travel discovery and contextual insights: the kind that take into account both your conscious and unconscious preferences. The Texas-based startup WayBlazer is already tapping into this, using IBM Watson cognitive computing technology to listen to and understand its customers and present considered, tailored travel suggestions.

What could be next?